16. Special Topic: Simple Least Squares Regression in Matrix Form

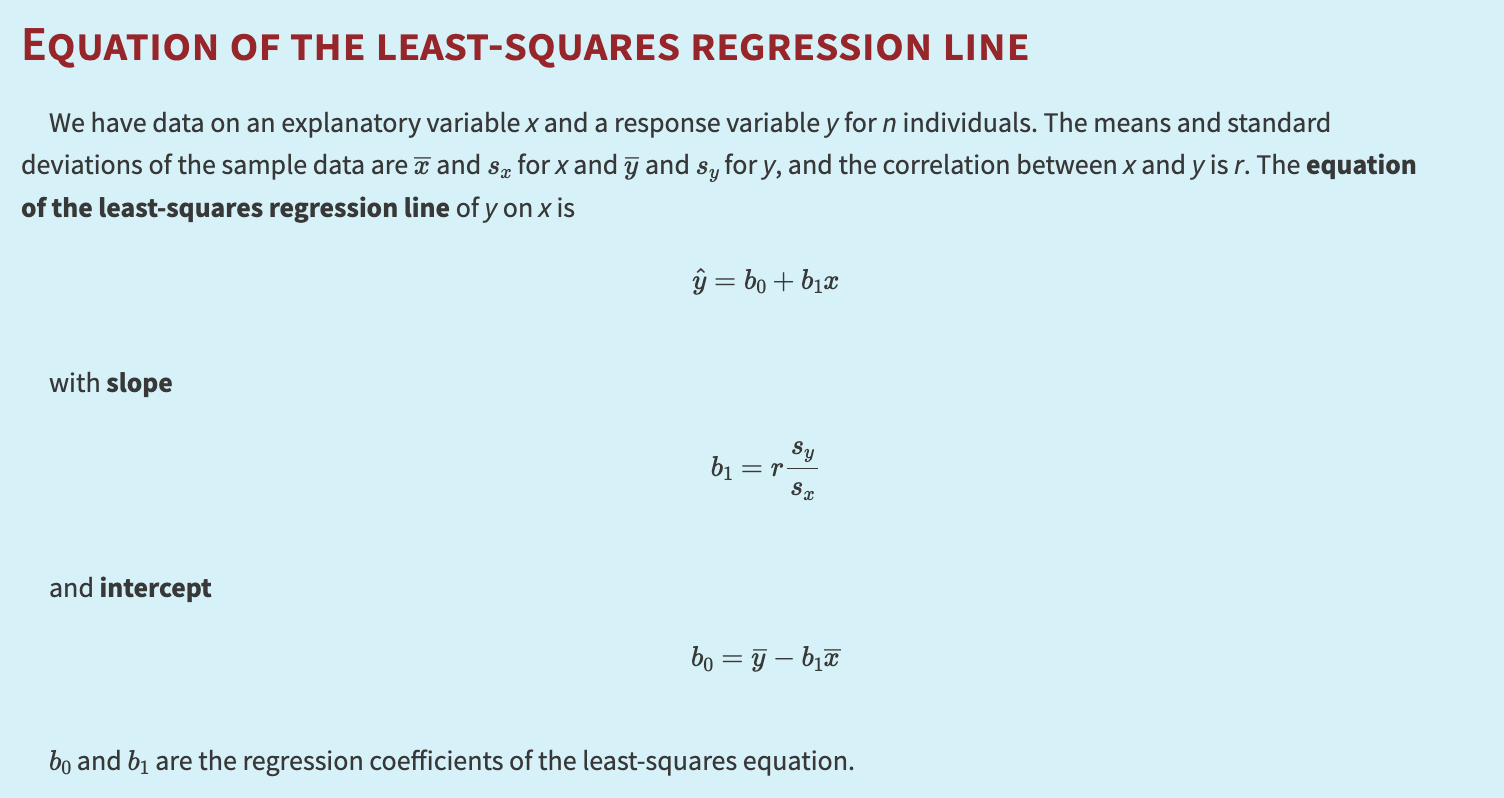

We have a data set consisting of \(n\) paired observations of the predictor (explanatory) variable \(X\) and the response variable \(Y\), i.e., \((x_1, y_1), (x_2, y_2), \dots, (x_n, y_n)\). We wish to fit the simple linear regression model:

\[
y_i = \beta_0 + \beta_1 x_i + \epsilon_i, \qquad i = 1, \dots, n,
\]

where we have the assumptions:

\(\mathbb{E}[\epsilon_i \mid x_1, \dots, x_n] = 0\),

\(\text{Var}[\epsilon_i \mid x_1, \dots, x_n] = \sigma^2\), and

the \(\epsilon_i\) are uncorrelated across measurements.

The parameters are \(\beta_0,\ \beta_1,\) and \(\sigma\).
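To make the roles of the three parameters concrete, here is a minimal simulation sketch of data from the model \(y_i = \beta_0 + \beta_1 x_i + \epsilon_i\). The parameter values, the sample size, the uniform predictor, and the Gaussian noise are all illustrative assumptions; the model itself only requires zero-mean, constant-variance, uncorrelated noise.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical parameter values, chosen only for illustration.
beta_0, beta_1, sigma = 1.0, 2.0, 0.5
n = 200

# Predictor values; the uniform range is an arbitrary choice.
x_obs = rng.uniform(0.0, 10.0, size=n)

# Gaussian noise is an assumption made here for convenience: it satisfies
# the zero-mean, constant-variance, uncorrelated conditions, but the
# model does not require normality.
eps = rng.normal(loc=0.0, scale=sigma, size=n)

# Response generated from the regression line plus noise.
y = beta_0 + beta_1 * x_obs + eps

print(y[:5])
```

With a fixed seed the draw is reproducible, which is convenient when comparing estimates of \(\beta_0\) and \(\beta_1\) against the values used to generate the data.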

16.1. The Matrix Representation

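Group all of the observations of the response into a single \(n\times 1\) column vector \(\mathbf{y}\):

\[
\mathbf{y} = \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix}
\]

Similarly, group the two coefficients into a single \(2 \times 1\) vector:

\[
\beta = \begin{bmatrix} \beta_0 \\ \beta_1 \end{bmatrix}
\]

We also group the observations of the predictor variable:

\[
\mathbf{x} = \begin{bmatrix} 1 & x_1 \\ 1 & x_2 \\ \vdots & \vdots \\ 1 & x_n \end{bmatrix}
\]

This is an \(n \times 2\) matrix, where the first column is all 1’s (for the intercept) and the second column holds the actual \(X\) values. Then:

\[
\mathbf{x}\beta = \begin{bmatrix} \beta_0 + \beta_1 x_1 \\ \beta_0 + \beta_1 x_2 \\ \vdots \\ \beta_0 + \beta_1 x_n \end{bmatrix}
\]

i.e., \(\mathbf{x}\beta\) is the \(n \times 1\) vector of fitted predictions. The matrix \(\mathbf{x}\) is often called the design matrix. Thus, writing \(\epsilon\) for the \(n \times 1\) vector of noise terms \(\epsilon_1, \dots, \epsilon_n\), the model becomes:

\[
\mathbf{y} = \mathbf{x}\beta + \epsilon
\]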
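As a rough illustration of the matrix representation, the sketch below builds a response vector and a design matrix with NumPy and checks that the product \(\mathbf{x}\beta\) reproduces \(\beta_0 + \beta_1 x_i\) row by row. The five data points and the coefficient values are hypothetical, chosen only to keep the arithmetic easy to follow.

```python
import numpy as np

# Hypothetical toy data: five observations of predictor and response.
x_obs = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])    # response vector, shape (n,)

n = x_obs.shape[0]

# Design matrix: a column of ones (intercept) next to the predictor values.
x = np.column_stack([np.ones(n), x_obs])     # shape (n, 2)

# An arbitrary candidate coefficient vector (beta_0, beta_1).
beta = np.array([0.5, 2.0])

# One matrix-vector product yields beta_0 + beta_1 * x_i for every i.
fitted = x @ beta

print(x.shape)
print(fitted)
```

The column of ones is what lets the intercept be treated as just another coefficient, so the whole model reduces to a single matrix-vector product.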

16.2. Mean Squared Error in Matrix Form

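At each data point, using the coefficients \(\hat{\beta}\) results in some error of prediction, so we have \(n\) such errors forming the vector:

\[
\mathbf{e}(\hat{\beta}) = \mathbf{y} - \mathbf{x}\hat{\beta}
\]

When deriving the least squares estimator, we want to find \(\hat{\beta}\) that minimizes the mean squared error (MSE):

\[
MSE(\hat{\beta}) = \frac{1}{n} \sum_{i=1}^{n} e_i^2(\hat{\beta})
\]

In matrix form:

\[
MSE(\hat{\beta}) = \frac{1}{n}\, \mathbf{e}^\top \mathbf{e}
\]

To see this clearly,

\[
\mathbf{e}^\top \mathbf{e} = \begin{bmatrix} e_1 & e_2 & \cdots & e_n \end{bmatrix} \begin{bmatrix} e_1 \\ e_2 \\ \vdots \\ e_n \end{bmatrix} = \sum_{i=1}^{n} e_i^2
\]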
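The equivalence between the matrix form \(\frac{1}{n}\mathbf{e}^\top\mathbf{e}\) and the average of squared errors can be checked numerically. The following is a small sketch on hypothetical toy data with an arbitrary candidate \(\hat{\beta}\); none of these values come from the text.

```python
import numpy as np

# Hypothetical toy data and design matrix.
x_obs = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])
n = len(y)
x = np.column_stack([np.ones(n), x_obs])

# Arbitrary candidate coefficients (not the least squares solution).
beta_hat = np.array([0.5, 2.0])

# Vector of prediction errors e = y - x beta_hat.
e = y - x @ beta_hat

# Matrix form: (1/n) e^T e. For 1-D arrays, e @ e is the inner product.
mse_matrix = (e @ e) / n

# Element-wise form: average of the squared errors.
mse_elementwise = np.mean(e ** 2)

print(mse_matrix, mse_elementwise)
```

Both expressions compute the same number; the matrix form is simply the inner product of the error vector with itself, scaled by \(1/n\).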

16.3. Expanding the MSE Matrix

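Substituting \(\mathbf{e} = \mathbf{y} - \mathbf{x}\hat{\beta}\) and multiplying out:

\[
\begin{aligned}
MSE(\hat{\beta}) &= \frac{1}{n}\,(\mathbf{y} - \mathbf{x}\hat{\beta})^\top (\mathbf{y} - \mathbf{x}\hat{\beta}) \\
&= \frac{1}{n}\,\bigl(\mathbf{y}^\top - \hat{\beta}^\top \mathbf{x}^\top\bigr)\bigl(\mathbf{y} - \mathbf{x}\hat{\beta}\bigr) \\
&= \frac{1}{n}\,\bigl(\mathbf{y}^\top \mathbf{y} - \mathbf{y}^\top \mathbf{x}\,\hat{\beta} - \hat{\beta}^\top \mathbf{x}^\top \mathbf{y} + \hat{\beta}^\top \mathbf{x}^\top \mathbf{x}\,\hat{\beta}\bigr)
\end{aligned}
\]

Since \(\bigl(\mathbf{y}^\top \mathbf{x}\,\hat{\beta}\bigr)\) is a scalar, it equals its own transpose, so \(\mathbf{y}^\top \mathbf{x}\,\hat{\beta} = \hat{\beta}^\top \mathbf{x}^\top \mathbf{y}\). Thus:

\[
MSE(\hat{\beta}) = \frac{1}{n}\,\bigl(\mathbf{y}^\top \mathbf{y} - 2\,\hat{\beta}^\top \mathbf{x}^\top \mathbf{y} + \hat{\beta}^\top \mathbf{x}^\top \mathbf{x}\,\hat{\beta}\bigr)
\]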
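A quick numerical sanity check of the expansion, assuming hypothetical toy data and an arbitrary \(\hat{\beta}\): the two cross terms agree because each is a scalar, and the three-term expanded form \(\frac{1}{n}(\mathbf{y}^\top\mathbf{y} - 2\hat{\beta}^\top\mathbf{x}^\top\mathbf{y} + \hat{\beta}^\top\mathbf{x}^\top\mathbf{x}\hat{\beta})\) matches the direct computation of the MSE.

```python
import numpy as np

# Hypothetical toy data and design matrix.
x_obs = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])
n = len(y)
x = np.column_stack([np.ones(n), x_obs])
beta_hat = np.array([0.5, 2.0])   # arbitrary candidate coefficients

# The two cross terms are the same scalar.
cross_a = y @ x @ beta_hat        # y^T x beta_hat
cross_b = beta_hat @ x.T @ y      # beta_hat^T x^T y

# Expanded form of the MSE.
yty = y @ y
quad = beta_hat @ x.T @ x @ beta_hat
mse_expanded = (yty - 2.0 * cross_b + quad) / n

# Direct form, straight from the error vector.
e = y - x @ beta_hat
mse_direct = (e @ e) / n

print(cross_a, cross_b)
print(mse_expanded, mse_direct)
```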

16.4. Minimizing the MSE

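We first compute the gradient of \(MSE(\hat{\beta})\) w.r.t. \(\hat{\beta}\):

\[
\nabla MSE(\hat{\beta}) = \frac{1}{n}\,\bigl(\nabla\,\mathbf{y}^\top \mathbf{y} - 2\,\nabla\,\hat{\beta}^\top \mathbf{x}^\top \mathbf{y} + \nabla\,\hat{\beta}^\top \mathbf{x}^\top \mathbf{x}\,\hat{\beta}\bigr)
\]

But \(\nabla\,\mathbf{y}^\top \mathbf{y} = \mathbf{0}\) (it’s constant in \(\hat{\beta}\)), while \(\nabla\,\hat{\beta}^\top \mathbf{x}^\top \mathbf{y} = \mathbf{x}^\top \mathbf{y}\) and \(\nabla\,\hat{\beta}^\top \mathbf{x}^\top \mathbf{x}\,\hat{\beta} = 2\,\mathbf{x}^\top \mathbf{x}\,\hat{\beta}\), so:

\[
\nabla MSE(\hat{\beta}) = \frac{1}{n}\,\bigl(-2\,\mathbf{x}^\top \mathbf{y} + 2\,\mathbf{x}^\top \mathbf{x}\,\hat{\beta}\bigr) = \frac{2}{n}\,\bigl(\mathbf{x}^\top \mathbf{x}\,\hat{\beta} - \mathbf{x}^\top \mathbf{y}\bigr)
\]

Setting this to zero:

\[
\mathbf{x}^\top \mathbf{x}\,\hat{\beta} - \mathbf{x}^\top \mathbf{y} = \mathbf{0}
\]

Hence, provided \(\mathbf{x}^\top \mathbf{x}\) is invertible (which holds whenever the \(x_i\) are not all equal),

\[
\hat{\beta} = (\mathbf{x}^\top \mathbf{x})^{-1}\,\mathbf{x}^\top \mathbf{y}
\]

A very compact result!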
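The estimator \(\hat{\beta} = (\mathbf{x}^\top\mathbf{x})^{-1}\mathbf{x}^\top\mathbf{y}\) can be tried out on a small example. The sketch below uses hypothetical toy data, solves the normal equations \(\mathbf{x}^\top\mathbf{x}\,\hat{\beta} = \mathbf{x}^\top\mathbf{y}\) with `np.linalg.solve` rather than forming the inverse explicitly, and compares the result with NumPy's own least squares routine.

```python
import numpy as np

# Hypothetical toy data and design matrix.
x_obs = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])
n = len(y)
x = np.column_stack([np.ones(n), x_obs])

# Pieces of the normal equations.
xtx = x.T @ x     # 2 x 2 matrix
xty = x.T @ y     # length-2 vector

# Solve (x^T x) beta_hat = x^T y; assumes x^T x is invertible.
beta_hat = np.linalg.solve(xtx, xty)

# The gradient (2/n)(x^T x beta_hat - x^T y) should vanish at the solution.
gradient = (2.0 / n) * (xtx @ beta_hat - xty)

# Cross-check against NumPy's least squares solver.
beta_lstsq, *_ = np.linalg.lstsq(x, y, rcond=None)

print(beta_hat)
print(gradient)
print(beta_lstsq)
```

Solving the linear system is generally preferred to computing \((\mathbf{x}^\top\mathbf{x})^{-1}\) directly, since it is cheaper and numerically better behaved; for a two-column design matrix like this one the difference is negligible, but the habit carries over to larger problems.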